What is Retrieval-Augmented Generation (RAG) in AI & How It Works

Retrieval-Augmented Generation (RAG) is changing the game in AI by making language models more accurate and more up to date. In this blog, you'll learn what RAG is, how it works, and why it has become a go-to approach for building reliable AI assistants in 2025.

Imagine you're taking a school test and forget an important fact. You could try to guess (which might go wrong), or you could quickly look up the answer in your notebook and give the correct one. That's basically what Retrieval-Augmented Generation (RAG) does for Artificial Intelligence (AI). Instead of just guessing from what it "remembers," it looks up real facts before giving an answer.

Let’s break it down in the simplest way possible, with real examples, simple comparisons, and a fun, friendly tone. By the end, you will say, “Oh, I get what RAG is now!”

Why Are People Talking About RAG in AI?

Modern AI tools, especially LLMs (Large Language Models), are super smart. They can talk like humans, write poems, even help with homework. But here’s the catch:

“Sometimes, even smart AI can make up stuff. This is called hallucination.”

These AIs don’t always know the latest facts. For example, if you ask a regular LLM about the score of a cricket match that happened yesterday, it might not know. That’s where RAG steps in like a superhero.

So, What is Retrieval-Augmented Generation?

RAG is a smart method that improves how AI answers questions. Instead of relying only on what it learned during training, a RAG system first retrieves relevant information from an external source (a document collection, a database, or a live search) and then generates an answer using that information.

RAG = Remember less, Google more!

It combines two powers:

- Retrieval: Finds useful information from a database or knowledge base (like notes or the internet).

- Generation: Uses that info to write or say something smart.

To dive deeper into RAG-compatible tech stacks, check out our guide on How to Build an AI Chatbot Using OpenAI and Streamlit.

Real-Life Example: RAG vs Regular LLM

Question: Who won the Best Actor Oscar in 2024?

- A regular LLM trained till 2023 might guess: "Brendan Fraser for The Whale."

- A RAG-powered model will search online or in an updated database and answer: "Cillian Murphy won Best Actor at the 2024 Oscars for Oppenheimer."
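To see why the two answers differ, it helps to look at what the model is actually sent. The sketch below is only an illustration: `build_prompt` is a hypothetical helper, the context string is hard-coded to stand in for whatever a retriever would return, and no model is called.

```python
from typing import Optional


def build_prompt(question: str, context: Optional[str] = None) -> str:
    """Return the text sent to the model, with or without retrieved context."""
    if context is None:
        # Regular LLM: the model can only rely on what it memorized in training.
        return f"Question: {question}\nAnswer:"
    # RAG: the retrieved passage is placed in front of the question.
    return (
        "Answer using only the context below.\n"
        f"Context: {context}\n"
        f"Question: {question}\nAnswer:"
    )


question = "Who won the Best Actor Oscar in 2024?"
# Hypothetical passage, standing in for whatever the retriever returned.
retrieved = "Cillian Murphy won Best Actor at the 2024 Oscars for Oppenheimer."

plain_prompt = build_prompt(question)
rag_prompt = build_prompt(question, retrieved)
print(rag_prompt)
```

The model is the same in both cases. The only difference is that the RAG prompt carries the fact the model needs.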

Why is RAG Important?

Let’s say you're building:

- A legal assistant bot

- A customer service chatbot

- A healthcare support tool

- A compliance automation system

Would you want the AI to make up answers or give real, correct info?

RAG helps AI:

- Stay up to date

- Be more accurate

- Reduce wrong answers

- Rely less on memorized knowledge (the facts live in the knowledge base, so the model doesn’t have to remember everything)

How Does RAG Actually Work? (With a Fun Analogy)

Imagine your brain is a chef. You get an order: "Make lasagna."

- Without RAG: The chef tries to remember the recipe and cooks from memory.

- With RAG: The chef checks a recipe book, grabs ingredients, and follows the steps.

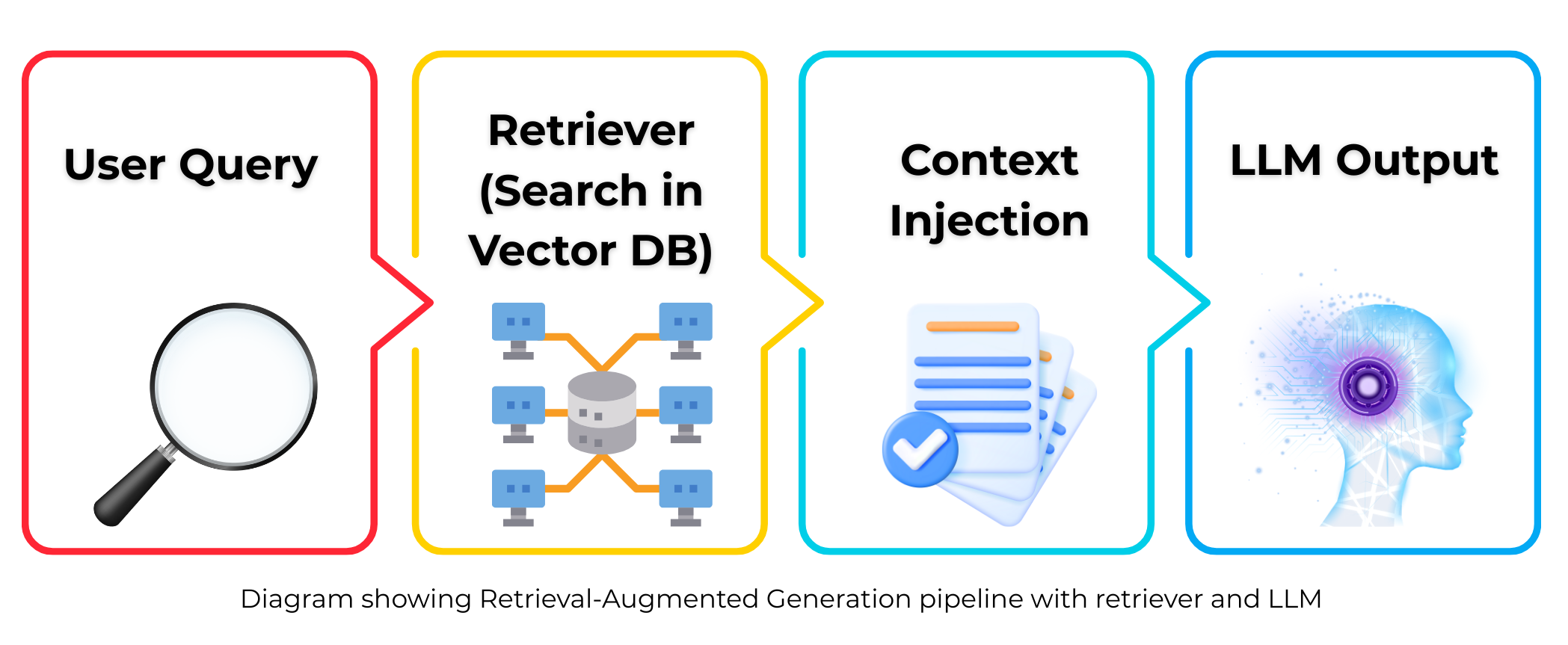

Step-by-Step Breakdown of RAG

- User Asks a Question: “What are the latest GDPR compliance rules in 2025?”

- Retrieval Module Gets to Work: Searches a knowledge base (often a vector index built with Weaviate, Pinecone, or FAISS) and finds the most relevant passages.

- Context Is Added to the Prompt: The retrieved passages are placed in the prompt alongside the question.

- LLM Generates Answer: Returns a response grounded in the retrieved passages, so it is as current and correct as the knowledge base itself.
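The four steps above can be sketched in a few lines of plain Python. This is a toy, under stated assumptions: `embed` uses simple word counts instead of a learned embedding model, the two documents are made up, and `echo_llm` is a stand-in that just returns its prompt instead of calling a real LLM.

```python
import math
import re
from collections import Counter
from typing import Callable, List


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, docs: List[str], k: int = 1) -> List[str]:
    """Step 2: rank documents by similarity to the question and keep the top k."""
    q = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def answer(question: str, docs: List[str], llm: Callable[[str], str]) -> str:
    context = "\n".join(retrieve(question, docs))  # step 2: retrieve
    prompt = f"Context:\n{context}\n\nQuestion: {question}"  # step 3: add context
    return llm(prompt)  # step 4: generate


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: returns the prompt it was given."""
    return prompt


# Hypothetical knowledge base.
docs = [
    "GDPR requires a lawful basis for processing personal data.",
    "Lasagna is made with pasta sheets, sauce, and cheese.",
]

question = "What does GDPR require for processing personal data?"  # step 1: user asks
result = answer(question, docs, echo_llm)
print(result)
```

In a real stack, `embed` would be an embedding model, the sorted list would be a vector index, and `echo_llm` would be a call to a hosted or local LLM. The control flow stays the same.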

Tools Used in a RAG Stack (With Quick Notes)

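- OpenAI / HuggingFace: Sources of the LLMs that generate answers

- Weaviate / Pinecone / FAISS: Vector search (Weaviate and Pinecone are vector databases; FAISS is a similarity-search library)

- LangChain / LlamaIndex: Frameworks for orchestration

- FastAPI: Python web framework for serving a RAG app as an API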

How is RAG Different from Fine-Tuning?

| Feature | Fine-Tuning | RAG |
|---|---|---|
| Cost | High (needs GPU & training time) | Low (just add new data!) |
| Time to Update | Slow | Fast |
| Flexibility | Needs retraining for new info | Just update the database |
| Accuracy | Depends on training data | Uses up-to-date facts |
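The "just update the database" row is easy to show in code. The sketch below assumes a tiny in-memory store: `KnowledgeBase` is a hypothetical class that matches on shared words, not a real vector database. Adding one document changes what gets retrieved, and no model is retrained.

```python
import re
from typing import List, Set


def words(text: str) -> Set[str]:
    """Lowercase word set used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Tiny in-memory stand-in for a document store (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.docs: List[str] = []

    def add(self, doc: str) -> None:
        # Updating RAG knowledge is just a data write; no model weights change.
        self.docs.append(doc)

    def search(self, query: str) -> str:
        # Return the document sharing the most words with the query.
        q = words(query)
        return max(self.docs, key=lambda d: len(q & words(d)))


kb = KnowledgeBase()
kb.add("Brendan Fraser won Best Actor at the 2023 Oscars for The Whale.")
query = "Who won Best Actor at the 2024 Oscars?"
before = kb.search(query)  # only the older fact is available

kb.add("Cillian Murphy won Best Actor at the 2024 Oscars for Oppenheimer.")
after = kb.search(query)  # the new document now matches best

print(before)
print(after)
```

With fine-tuning, getting the newer fact into the model would mean another training run. Here it is a single `add` call.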

When Should You Use RAG?

- Your info changes often

- You want real-time or recent answers

- You need factual accuracy

- You want to reduce costs of retraining

Use cases include compliance tools, AI customer support, and smart internal search.

Some Quick Fun Examples

1. Travel Assistant: “What’s the weather in Paris today?”

RAG AI: “Today in Paris, it’s sunny and 18°C.”

2. Medical Query: “What’s the latest COVID-19 protocol in Canada?”

RAG AI: Pulls data from government sites and answers accurately.

3. Coding Help: “What’s the best way to write a FastAPI GET endpoint?”

RAG AI: Shows updated code samples.

Frequently Asked Questions

What is Retrieval-Augmented Generation (RAG) in simple terms?

RAG is a method in AI where the system first searches for relevant facts from external data sources (like a database or documents) and then uses that information to generate accurate answers. It makes AI smarter by letting it “look things up” instead of guessing.

How is RAG different from traditional language models like GPT?

Traditional language models answer questions based on what they've learned during training. RAG adds a retrieval step — it searches for real-time or external information before responding, making the output more accurate and up-to-date.

Can I use RAG with OpenAI or ChatGPT models?

Yes, absolutely! RAG works beautifully with OpenAI models like GPT-3.5 or GPT-4. Many developers use LangChain or LlamaIndex to build RAG pipelines using these models.

Do I need to fine-tune the model to implement RAG?

No. That’s one of the biggest advantages of RAG. You don’t need to fine-tune the model. Instead, you just manage the knowledge base it pulls from — making updates super fast and cost-effective.

What types of data can RAG retrieve from?

RAG can pull context from PDFs and Word Docs, Notion or Confluence pages, SQL and NoSQL databases, websites, APIs, CSVs, and vector databases like Weaviate and Pinecone.
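Whatever the source, the data usually ends up as plain text records before it is indexed. Here is a minimal sketch of that normalization step using only the standard library. The function names, the record shape (`source` and `text`), and the sample inputs are all assumptions made for illustration.

```python
import csv
import io
from typing import Dict, List


def from_text(name: str, text: str) -> List[Dict[str, str]]:
    """Split plain text into one record per non-empty paragraph."""
    parts = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [{"source": name, "text": p} for p in parts]


def from_csv(name: str, raw: str) -> List[Dict[str, str]]:
    """Turn each CSV row into a single 'column: value; ...' text record."""
    rows = csv.DictReader(io.StringIO(raw))
    return [
        {"source": name, "text": "; ".join(f"{k}: {v}" for k, v in row.items())}
        for row in rows
    ]


# Hypothetical inputs standing in for a document and a spreadsheet export.
policy = "Refunds are issued within 14 days.\n\nContact support to start a return."
orders = "order_id,status\n1001,shipped\n1002,refunded\n"

records = from_text("policy.txt", policy) + from_csv("orders.csv", orders)
for record in records:
    print(record)
```

Formats like PDF or Word need a parser to extract the text first, but the output can follow the same record shape so the retriever treats every source alike.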

Is RAG suitable for compliance or legal tech apps?

Yes! RAG is a strong fit for compliance tools like GDPR or HIPAA assistants, where up-to-date and factual responses are essential. Because answers are grounded in the actual source documents, it reduces (though it does not eliminate) the risk of hallucination in legal contexts.

What tech stack do I need to build a RAG app?

You’ll typically use an LLM (like OpenAI or HuggingFace models), a retrieval layer (e.g., LangChain, LlamaIndex), a vector store (e.g., Weaviate, Pinecone, or a FAISS index), and a backend framework (e.g., FastAPI).

Is RAG only useful for chatbots?

Not at all. While chatbots are a common use case, RAG is also used in document search tools, internal knowledge assistants, code copilots, research tools, and customer support AI.

Final Thoughts: Why RAG is the Future of Smart AI

AI is amazing, but when it teams up with real data, it becomes unstoppable. That’s the power of RAG. It’s like giving AI access to the world’s library before answering your questions.

“In a world full of guesses, RAG brings facts back to the table.”

If you're building a chatbot, AI copilot, or compliance tool, and you want it to be smart, reliable, and real-time — you need RAG in your stack.

Need help building a RAG-powered AI assistant? Contact Zestminds and we’ll build it together.

Let’s make AI smarter, one fact at a time!

Shivam Sharma

About the Author

With over 13 years of experience in software development, I am the Founder, Director, and CTO of Zestminds, an IT agency specializing in custom software solutions, AI innovation, and digital transformation. I lead a team of skilled engineers, helping businesses streamline processes, optimize performance, and achieve growth through scalable web and mobile applications, AI integration, and automation.